Part I, Introduction

David Davidian* Adjunct Lecturer

American University of Armenia September 2015

“Knowledge of the enemy’s disposition can only be obtained from other men.” Sun Tzu

Collaboration and sharing of data across the command structure continue to be crucial factors in UAV systems. What was once a simple command console is now challenged by the number of UAVs operating simultaneously in theater and by the enormous increase in telemetry, especially high-quality video and Synthetic Aperture RADAR (SAR) data, all of which must be assimilated and shared to get the most out of the infrastructure.

Infrastructures initially designed to control a single UAV and handle its data now bear the burden of UAVs that are constantly being upgraded, while their ground stations have remained relatively unchanged. As design engineers added more and better sensor capabilities to the aircraft, the data centers became overwhelmed. Simply adding storage and some processing power, within the limits of the execution architecture, provides only incremental relief. The refrain “data is all over the floor” was heard quite often, and probably still is.

Specifically, it became nearly impossible to extract, consolidate, and synthesize metadata from these upgraded UAVs into a reasonably useful database for later retrieval of HD video, Electro-Optical/Infra-Red (EO-IR), radar, geodetic, and other data feeds. Real-time video is generally stored, yet in many cases without the capability of local processing. Post-processing is always delayed, negating much of its tactical usefulness. With multiple UAVs feeding data into an infrastructure, the architecture must be resilient and scalable enough to ingest ever larger amounts of data, yet still able to disseminate crucial actionable information throughout the command structure. In addition, this integrated infrastructure must not only allow collaboration between various command centers but also integrate non-UAV-sourced real-time intelligence into the current solution. In the words of renowned Israeli UAV commander Major Yair, “You have to make life and death calls in seconds” [1]. An intercepted cell phone call could weigh heavily on an attack decision.

Decisions not made with the maximum available data and intelligence can be costly. The ability of a UAV infrastructure to maximize data availability and real time collaboration is the key. The result has many of the characteristics of a complex dynamic system, such as:

- Feedback, both system and human

- Extracting order out of otherwise chaotic-looking events

- Withstanding failures – there are no re-runs on live videos

- Generally hierarchical: someone makes the final decision within a military chain of command

Situational Awareness

While it might be obvious that having live battlefield information and access to historical data (typically spanning a time frame from seconds earlier to weeks in the past) is of paramount importance in any military endeavor, wanting such a capability is one thing; having it is another.

Situational awareness is knowledge created through the interaction of an agent and its environment. Awareness is knowledge bounded by time and space. As environments change, awareness, or the data that results in awareness, must be maintained, kept up to date, and archived. Awareness is usually part of some other activity, rarely a goal in itself. Awareness is an everyday phenomenon, and its role becomes more noticeable as situations and environments become more dynamic, complex, and information-demanding.

The creation and maintenance of situational awareness is a three-stage process (see Endsley), with the following components:

- Perception of the relevant elements of the environment

- Comprehension of those elements

- Prediction of the states of those elements in the near future

An agent acting in an environment gathers observable information, selectively attends to those elements that are most relevant for the task at hand, integrates the incoming perceptual information with existing knowledge, and makes sense of it in light of the current situation. Finally, the agent should be able to anticipate changes in the environment and predict how incoming information will change. In reality, an agent and its coordinating infrastructure share these tasks.
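The three stages can be illustrated with a deliberately small sketch. It assumes each report carries an object identifier, a time, and a flat x/y position; `Observation`, `perceive`, `comprehend`, and `predict` are hypothetical names, and straight-line extrapolation stands in for whatever prediction model a real system would use.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """One sensor report: object id, time (s), position (km on a local grid)."""
    obj_id: str
    t: float
    x: float
    y: float


def perceive(observations, roi):
    """Stage 1, perception: keep only reports inside the region of interest.
    roi is (xmin, ymin, xmax, ymax)."""
    xmin, ymin, xmax, ymax = roi
    return [o for o in observations
            if xmin <= o.x <= xmax and ymin <= o.y <= ymax]


def comprehend(observations):
    """Stage 2, comprehension: fuse the two latest reports per object into a
    state (t, x, y, vx, vy). Objects seen only once are skipped."""
    tracks = {}
    for o in sorted(observations, key=lambda o: o.t):
        tracks.setdefault(o.obj_id, []).append(o)
    states = {}
    for obj_id, track in tracks.items():
        if len(track) < 2:
            continue  # one report gives a position but no velocity
        a, b = track[-2], track[-1]
        dt = b.t - a.t
        if dt <= 0:
            continue  # duplicate timestamps: no usable velocity
        states[obj_id] = (b.t, b.x, b.y, (b.x - a.x) / dt, (b.y - a.y) / dt)
    return states


def predict(states, t_future):
    """Stage 3, prediction: straight-line extrapolation of each object to t_future."""
    return {obj_id: (x + vx * (t_future - t), y + vy * (t_future - t))
            for obj_id, (t, x, y, vx, vy) in states.items()}
```

For two reports of the same truck 10 seconds apart inside the ROI, `predict(comprehend(perceive(reports, roi)), t)` extrapolates its position; a contact outside the ROI never reaches the later stages.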

Awareness and its dissemination are not merely a cross section of constituent components, but rather a coordinated, continuously harmonized system. In complex organizations it can even be multilevel, roughly following a “need to know” regime. Information gathered by individual agents can overlap, depending on the level and content of cooperation between them. Creating value greater than what individual agents collect requires an understanding of the elements of the battlefield, both physical and virtual. In cooperating organizations, shared situational awareness can be divided by spatial or functional characteristics. In a spatial division, the overlapping parts relate to knowledge of physically adjacent regions, useful to certain levels within a command structure. In a functional division, the overlapping parts contain object data with specific relevance to particular levels in a command structure. Seemingly mutually exclusive details might characterize and define the situational awareness requirements of different command levels in a complex organization, yet all of them draw on the same data and knowledge gathered by individual agents.
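The two kinds of division can be sketched as two filters over one shared picture. The region names, adjacency table, and per-level relevance table below are hypothetical placeholders; in practice they would come from the operational plan rather than being hard-coded.

```python
# Hypothetical region adjacency and per-level relevance tables.
ADJACENT = {
    "north": {"center"},
    "center": {"north", "south"},
    "south": {"center"},
}
RELEVANT_TYPES = {
    "battalion": {"vehicle", "artillery"},
    "theater": {"aircraft", "airfield"},
}


def spatial_view(picture, region):
    """Spatial division: objects in a command's own region and adjacent regions."""
    wanted = {region} | ADJACENT.get(region, set())
    return [obj for obj in picture if obj["region"] in wanted]


def functional_view(picture, level):
    """Functional division: objects whose type matters to a given command level."""
    wanted = RELEVANT_TYPES.get(level, set())
    return [obj for obj in picture if obj["type"] in wanted]


picture = [
    {"id": 1, "region": "north", "type": "vehicle"},
    {"id": 2, "region": "center", "type": "aircraft"},
    {"id": 3, "region": "south", "type": "airfield"},
]
spatial_ids = {o["id"] for o in spatial_view(picture, "north")}         # {1, 2}
functional_ids = {o["id"] for o in functional_view(picture, "theater")}  # {2, 3}
# Object 2 appears in both views: the overlapping part of shared awareness.
print(spatial_ids & functional_ids)  # {2}
```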

In the case of military command and control (C2), the environment is the battlefield, thus in military literature we find the terms: battlefield awareness, battle space awareness, and battle space knowledge. This can be seen in the following example.

An Example

In this simplistic example, it is a military necessity to stop a shipment of dangerous arms or weapons of mass destruction (WMD). Tracking such material requires knowledge of air transport within a Region of Interest (ROI). To succeed in locating, disabling, or destroying this shipment, a combination of structured data (actual flight plans, or at least a substantiated landing/take-off of aircraft with the mechanical characteristics required for the transport) and UAV surveillance is required at a minimum. Complex event rules could be entered into a system and triggered by the available data. However, constraints exist: the enemy can easily shoot down UAV surveillance craft, which requires randomly spaced and timed over-flights of probable airfields, and only a limited number of UAVs can be launched over enemy territory. Also, it is neither possible nor desirable to preemptively destroy every runway a suitable transport could use.
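A complex event rule of the kind mentioned above might correlate a HUMINT report with a UAV infrared detection at the same site. The sketch below is a hypothetical batch version: the event fields, source labels, site identifiers, and the one-hour default window are all assumptions. A production CEP engine would evaluate such a rule incrementally over live streams; this version only shows the rule logic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    t: float     # seconds since mission start
    source: str  # e.g. "HUMINT", "UAV-IR" (labels are hypothetical)
    kind: str    # e.g. "transport_reported", "heat_signature"
    site: str    # airfield identifier (hypothetical)


def transport_rule(events, window_s=3600.0):
    """Hypothetical rule: flag a site when a HUMINT transport report and a UAV
    infrared heat signature at that site occur within window_s seconds of
    each other. Returns sorted (site, humint_t, ir_t) tuples."""
    reports = [e for e in events
               if e.source == "HUMINT" and e.kind == "transport_reported"]
    heat = [e for e in events
            if e.source == "UAV-IR" and e.kind == "heat_signature"]
    return sorted((r.site, r.t, h.t)
                  for r in reports for h in heat
                  if r.site == h.site and abs(r.t - h.t) <= window_s)
```

A heat signature at a different airfield, or one at the same airfield hours later, does not fire the rule.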

A transport has been spotted by human intelligence (HUMINT), but has not been confirmed by any available flight information, radar contact, etc. A local commander, Commander A, is staking his reputation on the accuracy of the report that a transport has taken off, but it is not clear how current this information is. The most likely base where such a transport could land does not show a transport on the ground in normal video. If there had been such a landing, a decision would most likely be made to destroy the entire warehouse and hangar. Commander A is watching a live video feed of the most likely location where this transport would have landed or taken off. He sees no transport aircraft (Figure 1):

Figure 1. There appears to be no transport aircraft on the ground and no place to hide it (simulated image)

The UAV data system infrastructure allows Commander A to go back in time and review not only recorded video but also EO-IR imagery. This is possible only if such data is not merely stored but kept in a form that is both retrievable and supports sharing and collaborative interpretation.
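“Going back in time” across feeds from several UAVs is, at its simplest, a time-range query over tagged metadata. The sketch below uses Python’s built-in `sqlite3` as a stand-in for the ground-station database; the `sensor_frame` table, its columns, and the helper names are hypothetical. Only metadata is stored per frame, with `uri` pointing at the raw frame kept elsewhere.

```python
import sqlite3


def open_store():
    """In-memory stand-in for the ground-station archive (hypothetical schema)."""
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE sensor_frame ("
               " uav_id TEXT NOT NULL, sensor TEXT NOT NULL,"
               " t REAL NOT NULL, site TEXT, uri TEXT NOT NULL)")
    # Index on (site, t) so "go back N minutes at this site" is a range scan.
    db.execute("CREATE INDEX idx_site_t ON sensor_frame (site, t)")
    return db


def tag_and_store(db, uav_id, sensor, t, site, uri):
    """Record one tagged frame (t in seconds)."""
    db.execute("INSERT INTO sensor_frame VALUES (?, ?, ?, ?, ?)",
               (uav_id, sensor, t, site, uri))


def look_back(db, site, now, minutes, sensor=None):
    """All frames for a site in the last `minutes`, across every UAV,
    optionally restricted to one sensor type, oldest first."""
    sql = ("SELECT uav_id, sensor, t, uri FROM sensor_frame "
           "WHERE site = ? AND t BETWEEN ? AND ?")
    args = [site, now - minutes * 60.0, now]
    if sensor is not None:
        sql += " AND sensor = ?"
        args.append(sensor)
    return db.execute(sql + " ORDER BY t", args).fetchall()
```

Because the query is keyed on site and time rather than on a particular UAV, an IR frame from a second aircraft shows up alongside the live EO feed the commander is watching.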

Commander A notices that, 23 minutes earlier, a heat signature appeared in the upper right of a data feed from a different UAV. The transport must have landed, offloaded its cargo, and taken off again between UAV reconnaissance sweeps. Commander A calls a secure meeting of those in his command structure. In the process, he acquires an enhanced image of his find, and as soon as all invited members have joined the session, he highlights it. Each session member opens their user interface (typically a secure web browser) and sees the same information Commander A has extracted from the database. The commander draws a circle around the transport aircraft’s ground heat signature, showing that it had landed quickly, with engines likely running while dropping off its deadly cargo. Note that Commander A’s command structure has not convened in a situation room; its members could be anywhere on a secure data network.

Figure 2. Commander A points out, on a shared interactive session, how the transport was at the air base.

At this point in this simplistic scenario, the decision to destroy at least the warehouse at this airbase will be made.

As this decision was being made, another decision maker, Commander B, notices the heat signature of a jet fighter that could have accompanied the transport as it took off, since a quick analysis shows that both ground heat traces are of the same relative temperature. This is seen in Figure 3, marked by the green circle. This tends to confirm the existence and importance of this stealth flight.

Figure 3. The heat signature of a jet fighter as noted by Commander B

Note the differences in agent-gathered data, how awareness was synthesized from these seemingly exclusive components, and how it was shared across a virtual command structure. For this integrated capability to exist, it must be designed into the system; it cannot be an afterthought. Some state-of-the-art UAV infrastructures have such capabilities. Those associated with the Israeli Aerospace Industries (ISI) have established systems, and solutions offered by firms such as General Atomics and Northrop Grumman (specifically its Aerospace Systems sector) have attempted to address such requirements. It is not cost-effective to redesign earlier-generation UAV support systems to accommodate real or near-real-time decision making, given the real challenges associated with Processing, Exploitation, and Dissemination (PED). A dichotomy exists between extending the limits of existing systems and seamlessly ascertaining and sharing battlefield situational awareness. The former is limited by its original design specifications, whereas the latter requires an expansive data center architecture with elastic capacity.

Consider the sheer amount of data that must be categorized and made accessible, keeping in mind that there are no reruns on live video. The scale of the problem became apparent in 2012, when the US Air Force cut in half the number of active Reaper UAVs because it simply did not have the manpower to process the data that additional aircraft would have generated.

The Problem and its Solution

To make the best decision possible and initiate adequate responses to situational threats, especially in a large-scale crisis, the command structure in charge must be fully aware of the current conditions, given all other physical constraints.

Situational awareness on the battlefield also allows threats and conditional changes to be perceived. Any threat has to be understood for an appropriate response to be executed successfully. The ability to understand a change in the battlefield is based on the quality and timing of the data. To perceive threats, data in the form of actionable information from different sources have to be available and relevant.

Some of these data may trigger automated complex event notifications; most will not. Awareness is achieved by developing a common operational picture that supports command, operators, battlefield personnel, and analysts.

Situational awareness is enhanced by a class of information referred to as Intelligence, Surveillance, and Reconnaissance (ISR). It is generally necessary to use a number of systems that detect threats and gather tactical battlefield data. The generated information has to be shared within the coalition of response forces. As the simple example above shows, without the ability to merge the ground heat signature of a transport suspected of carrying a WMD with a standard video feed, even though the two were out of phase and came from different sources, the commanders might not have concluded that a transport had landed and taken off, possibly with a fighter escort. This ability requires a rather sophisticated capability for ingesting vast amounts of information, tagging it for later access, and making it available via a user-friendly interface.
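Merging feeds that are out of phase usually starts with aligning their timestamps. A common approach, assumed here rather than taken from any system named in this paper, is a nearest-neighbor join within a tolerance: each IR detection is paired with the closest video frame time, and pairs farther apart than the tolerance are dropped. `align` is a hypothetical name; it relies only on the standard `bisect` module.

```python
from bisect import bisect_left


def align(reference_times, other_times, tolerance_s):
    """Pair each time in other_times with the nearest time in reference_times
    (which must be sorted ascending). Pairs farther apart than tolerance_s are
    dropped. Returns a list of (other_t, reference_t)."""
    pairs = []
    for t in other_times:
        i = bisect_left(reference_times, t)
        # The nearest reference time is either just before t or at/after t.
        candidates = [reference_times[j] for j in (i - 1, i)
                      if 0 <= j < len(reference_times)]
        if not candidates:
            continue  # empty reference stream
        nearest = min(candidates, key=lambda r: abs(r - t))
        if abs(nearest - t) <= tolerance_s:
            pairs.append((t, nearest))
    return pairs
```

With video frames every 0.5 s and IR detections at 0.2 s, 1.26 s, and 5.0 s, a 0.3 s tolerance pairs the first two and drops the third, which has no nearby frame.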

Information dissemination in a command structure requires adapting data to the task at hand, from the UAV operator at one end to mission commanders at the other. This adaptation is governed by need-to-know rules and information governance. If data is needed for tactical ISR in situ, it must be provided in real time, often directly from the sensor, and seen by the sensor operator and perhaps the UAV operator. If data is needed for strategic ISR at a command headquarters or center, for example, it often need not be provided immediately, although it could be. Given these two extremes, information has to be available at different granularities. The use of a mix of sensor and information systems forms the basis for situational awareness at different command levels.
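The two extremes of granularity can be sketched as a single delivery function with two modes. This is an assumption-laden illustration: the level names, the `(t_seconds, value)` sample format, and the one-minute bucket are hypothetical, and a real system would enforce need-to-know through access control rather than a string switch.

```python
from statistics import mean


def summarize(samples, bucket_s=60.0):
    """Strategic granularity: one (bucket_start, count, mean) per time bucket.
    samples are (t_seconds, value) pairs, e.g. detections per sensor sweep."""
    buckets = {}
    for t, v in samples:
        buckets.setdefault(int(t // bucket_s), []).append(v)
    return [(k * bucket_s, len(vs), mean(vs))
            for k, vs in sorted(buckets.items())]


def deliver(samples, level, bucket_s=60.0):
    """Hypothetical need-to-know switch: tactical users get every sample
    as-is; strategic users get time-bucketed summaries."""
    if level == "tactical":
        return list(samples)
    if level == "strategic":
        return summarize(samples, bucket_s)
    raise ValueError(f"unknown command level: {level!r}")
```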

Within an integrated system, disparate technologies that complement one another are exploited, and their combined data output is essential to shared collaboration. An integrated system consists of sensors, Complex Event Processing (CEP), and external information systems, including social media. An ROI monitored for one commander may overlap with, and be of interest to, adjacent commands, as in the example above. Thus, it is necessary to share data and add information from external systems to achieve enhanced situational awareness.

Depending on the UAV, dissimilar data include, but are not limited to:

- Ground Moving Target Indicator (GMTI)

- EO/IR Video

- General RADAR

- Counter-Battery RADAR

- SAR

- Electronic Warfare Support Measures (ESM)

However, as part of the ground control station infrastructure, static items such as 3D mapping overlays, as well as items not normally considered of immediate tactical importance, such as real-time scanning of social media and cell phone intercepts, are also important constituents of an awareness campaign. It would not be the first time that such ancillary information has aborted an offensive UAV operation, for example, in Israeli operations in Gaza.

Processing sensor data in near real time, in a form exploitable by ground station and command center personnel, and making it available across the command structure is an enormous task. For example, the bandwidth requirements for the basic sensor operations on a non-offensive UAV might typically be:

- SAR at 1 m resolution: about 10 Mb/s total

- GMTI at 10 m resolution: 5 Mb/s

- EO/IR: over 40 MB/s (est.)

- Imagery at 0.3 m resolution (4K HD): could be upwards of 2 Mb/s

- Miscellaneous vehicle status: a few kb/s
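The per-sensor figures above can be totaled to estimate the ingest load a ground station faces. The sketch below normalizes everything to megabits per second under two stated assumptions: the EO/IR “MB/s” is read literally as megabytes (multiplied by 8), and “a few kb/s” is taken as 5 kb/s. `RATES_MBPS`, `per_uav_mbps`, and `fleet_load` are hypothetical names.

```python
BITS_PER_BYTE = 8

# Per-UAV rates from the list above, in Mb/s (assumptions noted inline).
RATES_MBPS = {
    "SAR (1 m)": 10.0,
    "GMTI (10 m)": 5.0,
    "EO/IR": 40.0 * BITS_PER_BYTE,  # assumes 40 MB/s means megabytes -> 320 Mb/s
    "Imagery (0.3 m)": 2.0,
    "Vehicle status": 0.005,        # assumes "a few kb/s" = 5 kb/s
}


def per_uav_mbps(rates=RATES_MBPS):
    """Total sensor bandwidth for one UAV, in Mb/s."""
    return sum(rates.values())


def fleet_load(n_uavs, rates=RATES_MBPS):
    """Aggregate ingest (Mb/s) and data volume (terabytes per 24 h) for n_uavs."""
    mbps = n_uavs * per_uav_mbps(rates)
    tb_per_day = mbps * 1e6 * 86400 / BITS_PER_BYTE / 1e12
    return mbps, tb_per_day
```

Under these assumptions one UAV comes to roughly 337 Mb/s, dominated by EO/IR, or about 3.6 TB per day; if the EO/IR figure is actually in megabits, `per_uav_mbps({**RATES_MBPS, "EO/IR": 40.0})` drops to about 57 Mb/s.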

A UAV ground station designed a decade ago can barely keep up with the UAV it was initially designed to service, and is probably a stovepipe operation, optimized for a set of linearly executed functions. The effort required to extract and create the metadata needed to place this much data bandwidth into a high-performance relational database management system (RDBMS) is considerable. Bandwidth throttling helps with an impending overflow, but a reduction in bandwidth results in a direct drop in information density and, subsequently, quality. This prevents full situational awareness from being available to decision makers, as some agent data has to be tactically dismissed. Currently, there does not appear to be a military requirement for ingesting and processing 4K (or even HD) video at 30 frames/sec (broadcast quality). Measurements taken here in Yerevan, Armenia, show that a database insertion capability equivalent to even 2 frames/sec of video (without local hardware frame interpolation) is acceptable, as long as multiple streams (for example, more than 10) can be stored at this quality and shared within 30 seconds to a minute of being tagged and stored.
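The 2 frames/sec figure can be related to a 30 frames/sec source through a simple decimation step before database insertion. The sketch below assumes one possible thinning rule (keep a frame only if at least 1/fps seconds have passed since the last kept one); `decimate` and `rows_per_second` are hypothetical names, and a real system might instead select key frames.

```python
def decimate(timestamps, target_fps):
    """Keep frames spaced at least 1/target_fps seconds apart, e.g. thinning
    30 frames/sec video to about 2 frames/sec before database insertion.
    timestamps must be sorted ascending (seconds)."""
    min_gap = 1.0 / target_fps
    kept, last = [], None
    for t in timestamps:
        # Small epsilon so frames landing exactly on the grid are not lost
        # to floating-point rounding.
        if last is None or t - last >= min_gap - 1e-9:
            kept.append(t)
            last = t
    return kept


def rows_per_second(n_streams, fps):
    """Tagged rows per second the database must sustain for n_streams."""
    return n_streams * fps
```

Two seconds of 30 frames/sec video reduce to four stored frames, and more than 10 streams at 2 frames/sec means the database must sustain at least `rows_per_second(10, 2)`, or 20 tagged rows per second, before indexing overhead.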

Solutions designed from the bottom up to support both the exponential growth in sensor quality (an increase in data depth and bandwidth) and the growing number of UAVs have adopted system architectures specifically designed to service multiple occurrences of the same requirement with elastic capacity. This approach is known as Service Oriented Architecture (SOA). Interestingly, the two solutions known to this author, those associated with ISI and Northrop Grumman, both use SOAs. The purpose of this paper is not to delve into the details of SOA, as references on its structure are readily available on the internet. Both commercial and open-source versions of this extensive software stack exist.

A Service Oriented Architecture is an evolution of distributed computing and modular programming. SOAs build capabilities out of software services. Services are relatively large, intrinsically unassociated units of functionality. Instead of services embedding calls to one another in the application stack, protocols are defined that describe how services can talk to each other. The architecture then relies on a business process expert (a SOA function, not a human) to link and sequence services, in a process known as orchestration, to meet a new or existing system requirement.
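Orchestration can be illustrated in miniature: each service takes and returns a message, no service calls another, and a separate orchestrator runs a named sequence of them. Everything here (the service names, message fields, and in-process registry) is hypothetical; real SOA stacks exchange messages over network protocols and describe processes in dedicated orchestration languages.

```python
from typing import Callable, Dict, List

Service = Callable[[dict], dict]


def ingest(msg: dict) -> dict:
    return {**msg, "ingested": True}


def tag(msg: dict) -> dict:
    # Attach searchable metadata (hypothetical fields).
    return {**msg, "tags": [msg["sensor"], msg["site"]]}


def store(msg: dict) -> dict:
    return {**msg, "stored": True}


def disseminate(msg: dict) -> dict:
    return {**msg, "sent_to": ["command"]}


# Services are registered by name and know nothing about each other.
SERVICES: Dict[str, Service] = {
    "ingest": ingest, "tag": tag, "store": store, "disseminate": disseminate,
}


def orchestrate(process: List[str], msg: dict) -> dict:
    """Run the named services in order, passing each one's output onward."""
    for name in process:
        msg = SERVICES[name](msg)
    return msg
```

The same services can be recombined without code changes: an archival process might run all four, while a hypothetical live-alert process skips `store` and goes straight from `tag` to `disseminate`.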

For example, traditionally, if multiple applications require credit check information, each application would duplicate the code required to perform the credit check (or, in the worst case, use a different implementation altogether). Each new program or application modification represents an additional code base that the company’s IT team must support, along with additional overhead. In other cases, the complexity of building custom applications in-house results in expensive work that might not integrate smoothly with other existing programs.

SOA solves these problems by shifting internal application development practice toward the creation of reusable components; these are the “services” noted above.

In reality, designing a SOA from the ground up, rather than retrofitting existing business functions into a new architecture, has turned out to be the best path to adoption. Thus, it is not surprising that state-of-the-art UAV data infrastructure solutions have turned to SOA to keep data “off the floor”.

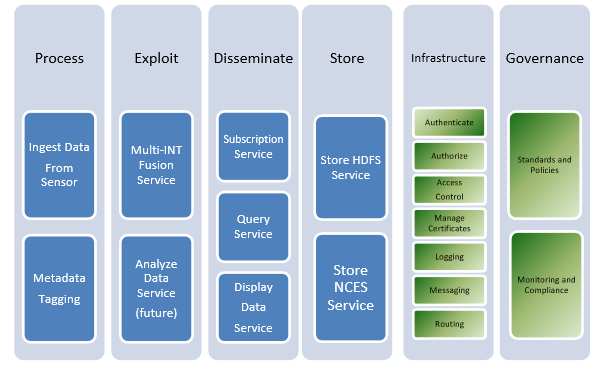

Figure 4. Northrop Grumman’s Multi-Intelligence Distribution Architecture (MIDA) SOA

MIDA is an enterprise-wide system that ingests intelligence data and provides access to the data through MIDA web services. As a SOA, it consists of services that handle data ingest, processing, exploitation, dissemination, storage, subscription, and query. Some ISI systems use similar SOA functionality, based partly on eDISH (Extensible Distributed Information Services Hub).
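A subscription service of the general kind listed above can be sketched as in-process publish/subscribe. This is not MIDA’s or eDISH’s interface, which this paper does not describe; the class, its methods, and the topic naming (sensor type plus site) are hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List


class SubscriptionService:
    """Minimal publish/subscribe sketch: consumers subscribe to a topic and
    are called with every item later published to that topic."""

    def __init__(self) -> None:
        self._subs: DefaultDict[str, List[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, callback: Callable[[dict], None]) -> None:
        self._subs[topic].append(callback)

    def publish(self, topic: str, item: dict) -> int:
        """Deliver item to every subscriber of topic; return how many were notified."""
        callbacks = self._subs.get(topic, [])  # .get avoids creating empty topics
        for cb in callbacks:
            cb(item)
        return len(callbacks)
```

A commander’s session could subscribe to a hypothetical topic such as `"IR/AB-1"` and be notified as soon as the ingest service publishes a newly tagged IR frame for that airfield, instead of polling the archive.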

References

[1] Israeli drone commander: ‘The life and death decisions I took in Gaza’

Sources

Toward a Theory of Situation Awareness in Dynamic Systems, M. Endsley, Human Factors 37(1), 1995

Interoperable Sharing of Data with Coalition Shared Data (CSD) Server

Multi-Intelligence Distribution Architecture

For the Armenian version see:

* Former IBM Federal Air Force System Architect, Technical Intelligence Analyst for both Sun Microsystems and IBM Federal.